by Dumitru Vasilescu and Alexandru Oprunenco

Major funding experiment under way! Here is everything you need to know

March 2, 2016

As development practitioners, we often wonder how effective our interventions are and what we could have done differently.

The burden on our shoulders is even heavier when we are testing a prospective government policy that, once scaled up nationally, might have considerable social impact and would affect the public purse.

Evaluating public policies or development programs is a science in itself. These days, running Randomized Controlled Trials has become a major trend.

Recently, we also jumped on the bandwagon to help answer the following question:

When it comes to small and medium-sized enterprises (SMEs), should the government get involved by providing additional funding, or should it stay away, or perhaps look for other ways to support them?

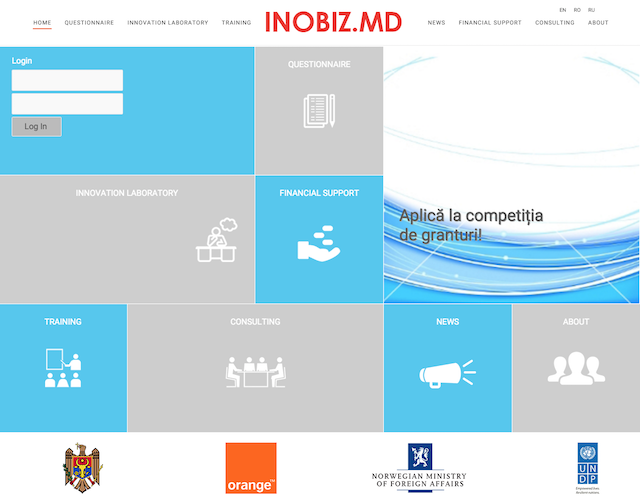

Here’s the problem. The National Innovation Strategy envisages setting up a voucher program for innovative SMEs. But policymakers are wondering whether giving these companies flexible access to otherwise expensive credit would actually help foster innovation.

So we decided to pilot a matching Innovation Award scheme and see if providing matching financial support to companies actually helps or hinders innovation: one group of companies would receive funding while another one would not.

Once we evaluate the results, the government will be able to fine-tune and bring the program (if it works at all!) up to scale.

Sound good so far?

We collaborated with our good partners from the Behavioural Insights Team, who quite recently evaluated their own voucher program for SMEs.

Our program has three phases:

- Setting up the Innovation Challenge: In summer and fall 2015, we launched an Innovation Challenge.

- Setting up the trial: In November-December 2015, all 104 technically winning proposals were randomized, with 63 placed in the treatment group (matching awards of up to 8,000 USD) and the remaining 41 in the control group (no financial award).

- Evaluation: 12-18 months from now, we will have completed our evaluation. Taking revenue and employment as outcome measures, we hope to use the difference between the two groups of statistically similar companies to see what impact, if any, the matching award scheme had on the companies.
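For readers who like to see the mechanics, here is a minimal sketch of the two statistical steps in the phases above: random assignment and a difference-in-means comparison. It is an illustration under assumptions, not the procedure we actually ran. The function names, the seed, and the use of a permutation test are all hypothetical choices made for this example, and the proposal IDs are simply the numbers 1 to 104.

```python
import random
from statistics import mean


def assign_groups(ids, n_treatment, seed=2015):
    """Randomly split ids into (treatment, control) lists.

    The seed is an arbitrary value chosen for reproducibility (assumption).
    """
    rng = random.Random(seed)
    shuffled = list(ids)
    rng.shuffle(shuffled)
    return shuffled[:n_treatment], shuffled[n_treatment:]


def difference_in_means(outcomes, treatment, control):
    """Mean outcome of the treatment group minus that of the control group.

    `outcomes` maps each company id to one outcome value, for example
    revenue or employment measured at the end of the trial.
    """
    treated = mean(outcomes[i] for i in treatment)
    untreated = mean(outcomes[i] for i in control)
    return treated - untreated


def permutation_p_value(outcomes, treatment, control,
                        n_permutations=2000, seed=0):
    """Two-sided permutation test for the difference in means.

    Group labels are reshuffled many times to see how often chance alone
    produces a gap at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(difference_in_means(outcomes, treatment, control))
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(difference_in_means(outcomes, pooled[:n_t], pooled[n_t:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical ids standing in for the 104 winning proposals.
proposal_ids = list(range(1, 105))
treatment, control = assign_groups(proposal_ids, n_treatment=63)
```

Once outcome data exist, they would be passed in as a dictionary keyed by company ID. A permutation test is used here only because it makes few distributional assumptions with a sample this small; a regression with baseline covariates would be another reasonable choice.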

Treading uncharted waters…

So far so good, but we have run into some challenges nonetheless. Let’s take a closer look:

Sample size: Generally, the larger the sample of statistically similar companies, the better. While we pinned our initial hopes on 200 companies, we had to settle for 104, and that left us at the mercy of statistical science. We will be walking on thin ice here.
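To give a feel for why the smaller sample matters, here is a rough sketch of a minimum detectable effect calculation, expressed in standard deviations of the outcome. It rests on assumptions that are ours for illustration only: a two-sided test at the 5% level, 80% power, a normal approximation, equal variance in both groups, and a hypothetical even split of 100 and 100 for the 200 companies we had hoped for. The function name is made up for this example.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_treatment, n_control, alpha=0.05, power=0.80):
    """Smallest standardized effect detectable with the given power.

    Uses the normal approximation for a two-sample comparison of means
    with equal variances; alpha and power are conventional defaults
    (assumptions), not parameters taken from the trial design.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value, two-sided test
    z_power = z.inv_cdf(power)           # quantile matching desired power
    return (z_alpha + z_power) * sqrt(1 / n_treatment + 1 / n_control)


actual = minimum_detectable_effect(63, 41)      # the split we ended up with
hoped_for = minimum_detectable_effect(100, 100)  # hypothetical 200 companies
print(f"104 companies (63/41): {actual:.2f} SD")
print(f"200 companies (100/100): {hoped_for:.2f} SD")
```

Under these assumptions the 63/41 split can only reliably pick up an effect of a bit more than half a standard deviation, against roughly 0.4 for the hypothetical 200. In other words, a real but modest impact of the awards could go undetected.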

Data: We need to be able to draw on data held by the national statistical office. Yet farmers and individual companies are not obliged to report to the statistical office. To compensate, we will back up our measurements with data from the tax office. We will also use monitoring visits and a mid-term survey with self-reported measures of business confidence to see how things evolve.

Ethical Questions: Denying awards to successful applicants is not something our conscience or the “control” companies take lightly. After all, we still need their cooperation on data disclosure and progress monitoring. Therefore, we inserted clauses in the Challenge Manual that explain the trial. Ultimately, the pool of funding was limited anyway, so we had to use some allocation mechanism.

So begins our major experiment. Watch this space for news of our progress!
